Keeping up a tradition that stretches back two years, Kiratech couldn’t miss DockerCon 2017 in Austin: we are always keen to learn new things and hear all the special announcements! Below is a summary of the main topics discussed during the Day 1 and Day 2 keynotes on April 18th and 19th.

DAY 1 Keynote:

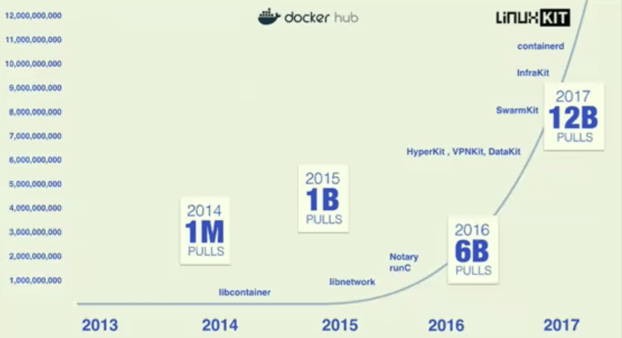

When the General Session started, Ben Golub, CEO of Docker, took the stage. He opened with an overview of Docker’s first 4 years and of how things have changed since the first DockerCon 3 years ago. Docker usage worldwide has grown impressively:

- The number of Docker hosts has reached 14 million

- There are 900K Dockerized apps

- 12 billion images have been pulled from Docker Hub.

Golub then talked about the changes in the Docker open source project over the last 3 years, mentioning that the stakeholders and the nature of the project have progressively changed (anticipating part of Solomon Hykes’ announcements).

He then thanked all the Docker Community members, the contributors, maintainers (you can see our DevOps Expert Lorenzo Fontana among them!!!) and mentors who support the Docker Project every day.

Solomon Hykes, CTO and founder of Docker, then took the stage. He focused his talk on reiterating the Docker mission: the world needs tools of mass innovation, and the “best tools” should follow some rules. They should:

- Get out of the way

- Adapt to you

- Make the powerful simple

And these features are also the basis of the difference between Docker Enterprise Edition and Community Edition.

Hykes then underlined how Docker strives to streamline the development cycle and remove friction from it, following a three-step process:

- Developers complain about details.

- Docker fixes these details.

- These 2 steps are repeated continuously.

Hykes also gave some examples of this process. The first concerned container images that are too big: the answer is multi-stage builds, which use a single Dockerfile and a single build command to create minimal runtime environments.
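As a rough illustration of the multi-stage idea (not the example shown on stage), a Dockerfile could look like the sketch below. The base image tag, the `main.go` source file and the paths are assumptions chosen only for illustration; multi-stage builds require Docker 17.05 or later.

```dockerfile
# Stage 1 (build): a full toolchain image, used only to compile.
# The image tag and the main.go file are illustrative assumptions.
FROM golang:1.21 AS build
WORKDIR /src
COPY main.go .
# Build a statically linked binary so it can run on an empty base image.
RUN CGO_ENABLED=0 go build -o /out/app main.go

# Stage 2 (run): start from an empty image and copy in only the binary.
# Nothing from the build stage (compiler, sources) ends up in the final image.
FROM scratch
COPY --from=build /out/app /app
ENTRYPOINT ["/app"]
```

A single `docker build -t myapp .` (the `myapp` tag is hypothetical) runs both stages, and only the last one becomes the resulting image.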

The second example is the difficulty of taking apps from the desktop to the cloud. The solution here is called Desktop to Cloud, which lets you connect to cloud-based Docker swarms directly from the desktop UI and offers built-in integration between Docker Cloud and Docker ID.

The focus then shifted to Docker for Operators. The first aspect Hykes discussed was security, and how hard it is to move to production securely. In particular, the challenges of a secure production environment are:

- Distributed systems: you could “continue to use the same tools” or, better, use secure orchestration. To enable this, Docker built SwarmKit, which provides:

    - Secure node introduction, which lets a node join a swarm securely

    - Cryptographic node identity via a public key infrastructure, for every node that wants to join

    - Mutual TLS (mTLS) between all nodes in your swarm

    - Cluster segmentation

    - Encrypted networks

    - Secure secret distribution

- Diverse infrastructure and OS: this is a portability problem. You can solve it by restricting the choice of operating systems or, better, with secure and portable orchestration.

- Developer choice: different frameworks, different applications, different databases… all this freedom of choice creates security challenges. You can solve this by giving developers less choice or, better, with secure, portable and usable orchestration.

Hykes pointed out that “a platform is only as secure as its weakest component”; this means that you have to worry about every component, and according to Hykes that’s exactly what Docker has been doing over the last year. Hykes discussed the work Docker Inc. has done on the various “Docker for X” platforms. Not every platform provides a Linux subsystem, so Docker needed to work with other companies to build a secure, lean and portable one. All this work led to LinuxKit:

- A SECURE Linux subsystem that only works with containers, serves as an incubator for security innovations, and is built using a community-first security process.

- A LEAN Linux subsystem with minimal size and boot time, where all the system services are containers.

- A PORTABLE Linux subsystem that runs everywhere.

Other companies that contribute to LinuxKit are IBM, Microsoft, Intel, Hewlett Packard Enterprise, and the Linux Foundation. At this point Solomon, underlining that Docker and Microsoft have been working together for 3 years, welcomed John Gossman, Azure Architect at Microsoft, to the stage.

John first focused on Hyper-V isolation, created to bring virtual machines and containers together (so far it has supported Windows-based containers), and then announced the next step: Docker and Microsoft have worked together to make it possible to run Linux containers, with a Linux kernel, on Windows by leveraging the Hyper-V hypervisor.

Hykes then retook the stage and open-sourced LinuxKit live: he finally made the LinuxKit repository on GitHub public.

Solomon then started talking about the container ecosystem and Docker’s commitment to it. He believes that “if the container ecosystem succeeds, Docker succeeds”. He gave an overview of how Docker’s participation in the growth of the container ecosystem has evolved over the last few years: in 2013 Docker adopted a pioneer model, standardizing application distribution, and saw 10s of projects, 100s of contributors, 1,000s of deployments and 0-100M Hub pulls; in 2015, with cloud-native apps on Linux servers and a production model made of “open components”, the ecosystem grew to 100s of projects, 1,000s of contributors and 10,000s of deployments. The component ecosystem is growing every day and containers are spreading to every category of computing, so we can say that the container ecosystem “goes mainstream”, and it needs to scale.

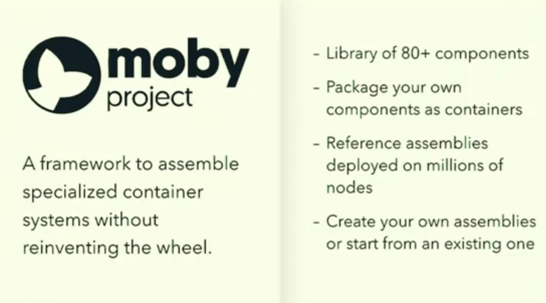

The solution is to make the assembly of all Docker project components open source as well. The result is the Moby project, a framework to assemble specialized container systems without reinventing the wheel. It’s a library of 80+ components that you can use and reuse in your own assemblies.

The project has reference assemblies that you can use unmodified, or that you can build upon or customize to create your own container systems. Docker will use Moby for all of its own open source development (for collaborators, contributors, etc.). Moby will be an open, community-run place and will leverage a governance model inspired by other successful projects (like Fedora). Moby is designed to work well with existing projects; there’s no requirement to donate code to Moby.

So what does Moby mean to you? According to Hykes:

- If you’re a Docker user: nothing will change, except that Docker will better leverage the ecosystem to innovate faster for you.

- If you’re a system builder: Moby will help you innovate and collaborate more efficiently.

In short, Moby turns multi-month R&D projects into weekend projects.

Solomon then gave a few examples of long projects that become weekend projects with Moby:

- Building a locked-down Linux system with remote attestation

- Leveraging this locked-down Linux system to create a custom CI/CD stack

- Rebuilding the custom CI/CD stack using Debian instead of LinuxKit and Terraform instead of InfraKit

Following up on this discussion, Hykes invited Rolf Neugebauer to help him demonstrate the flexibility of Moby. Rolf gave 2 demos, the first on a single system and the second on distributed systems deployed in the cloud. He demonstrated that with Moby you can collaborate on assemblies and take the ecosystem to the next level.

With the end of the demo, Hykes closed the Day 1 Keynote.

DAY 2 Keynote:

Ben Golub took the stage to kick off the Day 2 general session of DockerCon 2017. He started by reviewing some proposals for Docker logos, announcing an imminent change to the Docker logo.

Then he turned to Docker for enterprises and gave an overview of the best-known Docker customers. According to research by ETR, Docker appears “off the charts” in terms of adoption and market penetration within the enterprise. Golub supports the bimodal IT model, saying that the needs of Docker’s customers can be summed up in one word: speed, which means moving faster into the future.

Introducing the benefits and improvements that customers can see when adopting Docker, with both a microservices-first architecture and a traditional application architecture, Golub stated that Docker enables you to adopt future technologies more easily and to protect investments in older technologies. To do this, Docker needs to be diverse, supporting different platforms and different environments.

At this point, Golub welcomed Swamy Kocherlakota from Visa to the stage. After presenting some Visa numbers and strategies (making electronic payments accessible to everyone, everywhere; driving innovation through open platforms; deploying fast; and having a “just in time” infrastructure), Kocherlakota discussed Visa’s technical approach. He showed how virtualization had been inefficient and, over the last few years, had caused Visa long infrastructure provisioning times, heavy patching and maintenance, and a big infrastructure footprint. With Docker and microservices, Visa wanted to break this pattern and simplify deployment, improving developer productivity and standardizing the way apps are packaged and deployed. The idea is to move all developers and application groups to a “container-first”, microservices-based architecture (also called a Tetris-like infrastructure), focusing on deployment granularity, memory footprint, load balancing and operationalization.

Golub summarized some of the “lessons learned” by Docker over the years:

- Start with a secure base (that embraces diversity)

- Secure the whole supply chain (and keep that diversity): security should be usable and portable, and there should be trusted delivery throughout the container app lifecycle workflow.

- Leverage an ecosystem (that supports diversity)

This focus on diversity can be linked to the effort that Docker is putting into guaranteeing security across the various Docker Editions (Docker for Mac, Docker for Windows, Docker for AWS, etc.), and to CaaS (Containers-as-a-Service).

Golub then reviewed the announcement regarding the Docker Store and certified third-party container images, with particular attention to Docker Enterprise Edition, which includes Docker for AWS, Docker for Azure, etc.

He mentioned the major expansions of Docker Editions this week, which include Docker running on IBM z Systems and Power Systems, and Docker for GCP. Already available on every major cloud in the US, Docker is also partnering with Alibaba Cloud to provide Docker in Alibaba’s public cloud and in Alibaba’s private cloud offering (Apsara Stack). Consider that more than 60% of the 20 billion dollars in daily transactions worldwide happen via Alibaba, and Alibaba’s platform is built on Docker.

Lily Guo, Software Engineer at Docker, and Vivek Saraswat, Senior Product Manager at Docker, took the stage to demo the deployment of certified third-party applications via Docker. The demo showed how to convert different VMs into containers, and was also the chance to announce that third-party applications (Oracle’s in particular) are now available on the Docker Store.

At this point, Golub invited Mark Cavage from Oracle to talk a bit more about Oracle’s decision to make some Oracle software products (like Oracle Database, Oracle WebLogic, Oracle Coherence, Oracle Linux, and Java) available on the Docker Store. Oracle’s idea was to satisfy all the developers who think “..if it’s not on Docker I won’t download it” and to make these products usable by as many people as possible. Moreover, the Docker Store is a guarantee of quality.

Golub came back on stage and started talking about the effort Docker is putting into its program to modernize traditional applications, which aims to improve portability, security and efficiency for existing apps without modifying their source code. This program is backed by reliable key partners such as Avanade, Microsoft, Cisco, and HPE.

Golub introduced another use case, inviting Aaron Ades, a system engineer at MetLife, the holding corporation for the Metropolitan Life Insurance Company, to speak. Aaron explained how hard it is to work with legacy systems, particularly during a digital revolution and while dealing with different customers’ needs. Thanks to Docker and DevOps, which changed the company culture, MetLife was able to react and:

- Wrap the systems in microservices

- Tap the data

- Scrap the apps.

With Docker and microservices they easily moved workloads to Azure, consolidated virtual machines and gained massive operational leverage. In this way they demonstrated that “Even old elephants can dance”.

Finally, Ben thanked everyone and closed the Day 2 keynote.

Thanks to everyone who reads this article; we invite you to leave a comment or message if you enjoyed it! And… see you at the next DockerCon!